In this edition we’ll be covering…

Anthropic's potential $300B+ IPO plans for 2026

How to use Kling O1's unified video model through Higgsfield

A tutorial on implementing guardrails in OpenAI Agent Builder workflows

5 trending AI signals from Mistral, AWS, OpenAI, and more

3 AI tools to revolutionize your video creation and music production

And much more…

The Latest in AI

Claude’s Going Public (And OpenAI is Sweating)

Anthropic just kicked off what could become the biggest AI showdown of 2026. The Claude maker is reportedly preparing for one of the largest IPOs in tech history—and they're not taking their time.

According to reports from the Financial Times, Anthropic has engaged law firm Wilson Sonsini Goodrich & Rosati (the same firm that took Google, LinkedIn, and Lyft public) to prepare for a potential initial public offering as early as 2026.

The company has also been in preliminary discussions with major investment banks, though sources describe these talks as informal.

What's Coming?

Anthropic is simultaneously pursuing a private funding round that could value the company above $300 billion, including a combined $15 billion commitment from Microsoft and NVIDIA.

The AI startup is projecting to nearly triple its annualized revenue run rate to around $26 billion in 2026, up from current levels, with more than 300,000 business and enterprise customers already on board.

The move positions Anthropic in a direct race with OpenAI, which is reportedly laying groundwork for its own IPO that could value it at up to $1 trillion. OpenAI may file with securities regulators as early as the second half of 2026.

So What?

This is about establishing dominance in the enterprise AI market while valuations are still climbing. Three years ago, Google declared its own "Code Red" when ChatGPT launched. Now the tables have turned, and Anthropic is making its move while it has momentum with Claude's strong enterprise adoption.

Founded in 2021 by former OpenAI staff, Anthropic was recently valued at $183 billion and has positioned itself as a major rival through superior enterprise customer retention. The company's aggressive $50 billion AI infrastructure build-out in Texas and New York, plus tripling its international workforce, shows they're preparing for scale—whether public or private. The IPO filing might just be the official starting gun.

Tool Spotlight

Your Characters Finally Remember Who They Are

Kling AI just dropped what they're calling the world's first unified multimodal video model, and it's spectacular for video creators.

Unlike traditional tools that fragment your workflow between generation and editing, Kling O1 does it all in one place. You can generate cinematic videos from text or images, then edit, extend, or restyle them using simple conversation. Think of it as having a director's memory that remembers your characters and props across different shots.

Here's how you can start using it:

Head over to Higgsfield at https://higgsfield.ai/kling-o1-video or go directly to the Kling AI platform at https://app.klingai.com/global/omni/new

Upload your reference materials:

Add up to 7 images (minimum 300px resolution, max 10MB each in jpg, jpeg, or png)

Or upload one video clip (3-10 seconds, max 200MB, up to 2K resolution)

You can even create "Elements" by uploading multiple images from different angles (up to 4 images per element)

Write your prompt using this structure: [Detailed description of Elements] + [Interactions/actions between Elements] + [Environment or background] + [Visual directions like lighting, style, etc]

Generate your video—you'll receive high-fidelity output in seconds. You can create videos anywhere between 3-10 seconds to control your pacing.

Note: Kling O1 is currently available in Pro Mode only. Pricing is 8 credits/second without video input (40 credits for 5s, 80 for 10s), or 12 credits/second with video input (60 credits for 5s, 120 for 10s).

Upload reference images of your character, and Kling O1 will maintain their identity across shots, angles, and lighting conditions, solving one of AI video's biggest pain points.

🧠 You're reading this newsletter because you know AI matters…

But reading about AI ≠ actually using AI.

Here's the gap: You consume AI content, but you're not building meaningful products or workflows with it yet.

Inside our learning platform, you’ll have access to:

100+ hands-on lessons (not just theory)

A complete roadmap to take you from 0 → 1 with AI

Prompts you'll actually use today

Step-by-step automations for your workflows

(PLUS) weekly updates as AI evolves

(PLUS) 20+ new lessons released each month

All created by industry veterans. 3-day free trial.

Get Your Hands Dirty!

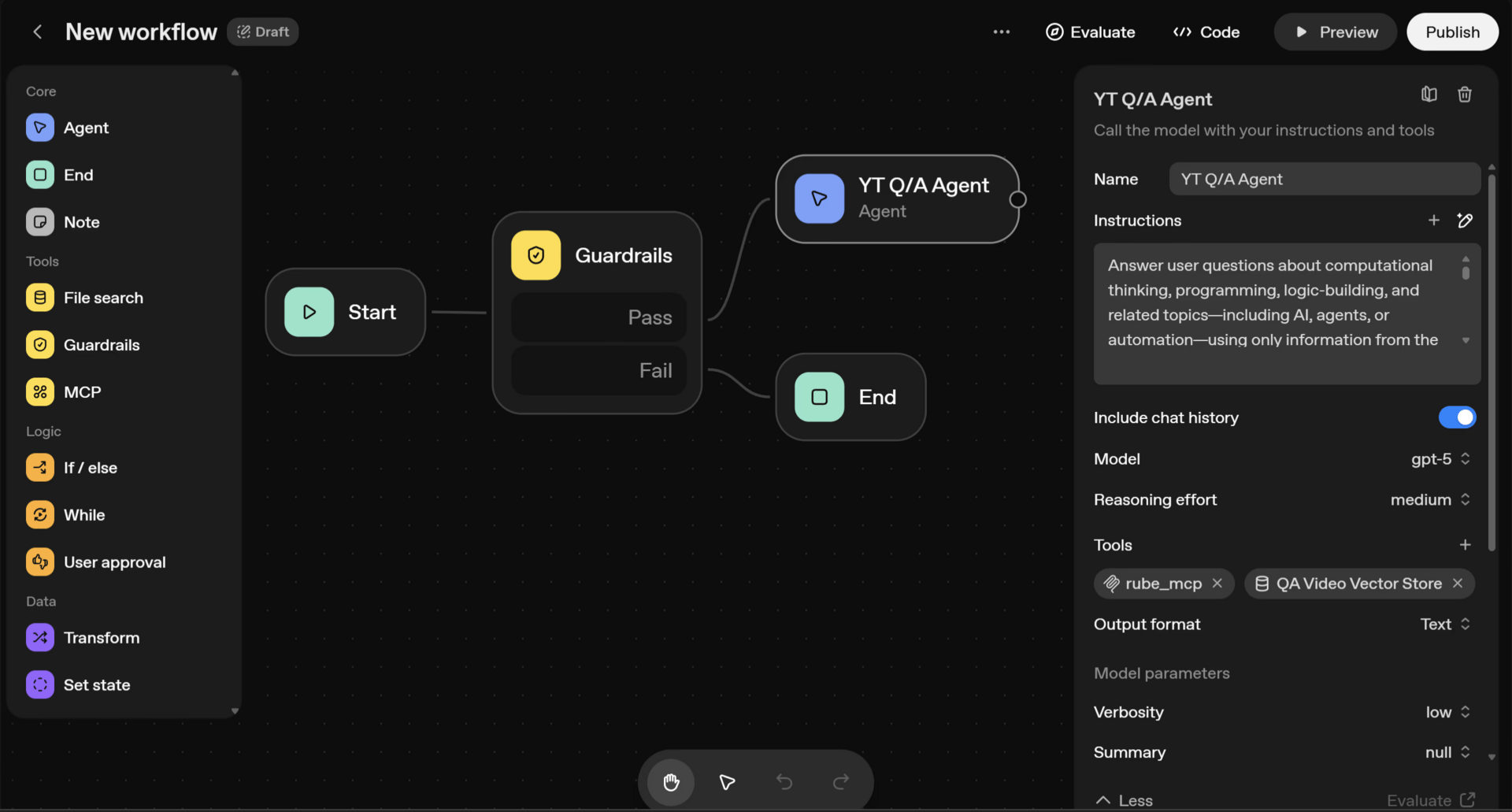

Teaching Your AI Agent Not to Be a Liability with Agent Builder

OpenAI's Agent Builder lets you create agentic workflows visually, but protecting your agents from misuse is crucial. Here's how to add guardrails using the platform's built-in safety features.

What Are Guardrails?

Guardrails are safety nodes that monitor agent inputs and outputs for unwanted content like personally identifiable information (PII), jailbreaks, hallucinations, and other forms of misuse. They work as pass/fail checkpoints in your workflow.

Here's how to implement them:

Log into the OpenAI Agent Builder at https://platform.openai.com/agent-builder and open your existing workflow or create a new one.

Add a Guardrails node after any agent node you want to monitor. Click the "+" button between nodes and select "Guardrails" from the Tool nodes section.

Configure your guardrail checks:

Select which types of content to screen for (PII detection, jailbreak attempts, hallucinations, etc.)

Define what happens when a guardrail fails: either end the workflow completely or return to the previous step with a reminder of safe use guidelines

Connect the output paths:

If the guardrail passes, continue to the next node in your workflow

If it fails, route to either an end node or back to the previous agent with corrective instructions

Test your guardrails thoroughly by intentionally triggering them with edge cases before deploying to production.

🔥 Pro tip: You can chain multiple guardrail nodes throughout your workflow to create layers of protection at different stages. For example, add one guardrail after user input to catch bad prompts early, and another after your agent's response to ensure safe outputs.

Quick Bites

Stay updated with our favorite highlights, dive in for a full flavor of the coverage!

AWS launched the Nova 2 model family in Amazon Bedrock, including Lite, Pro, Sonic (speech-to-speech), and Omni (first unified multimodal). Nova Forge lets you build custom models, while Nova Act creates browser-based agents with 90% reliability.

Mistral unveiled Mistral 3, featuring Mistral Large 3 (675B parameters) and the Ministral family (3B, 8B, 14B), all under Apache 2.0 license. Large 3 ranks #2 among open-source non-reasoning models on LMArena.

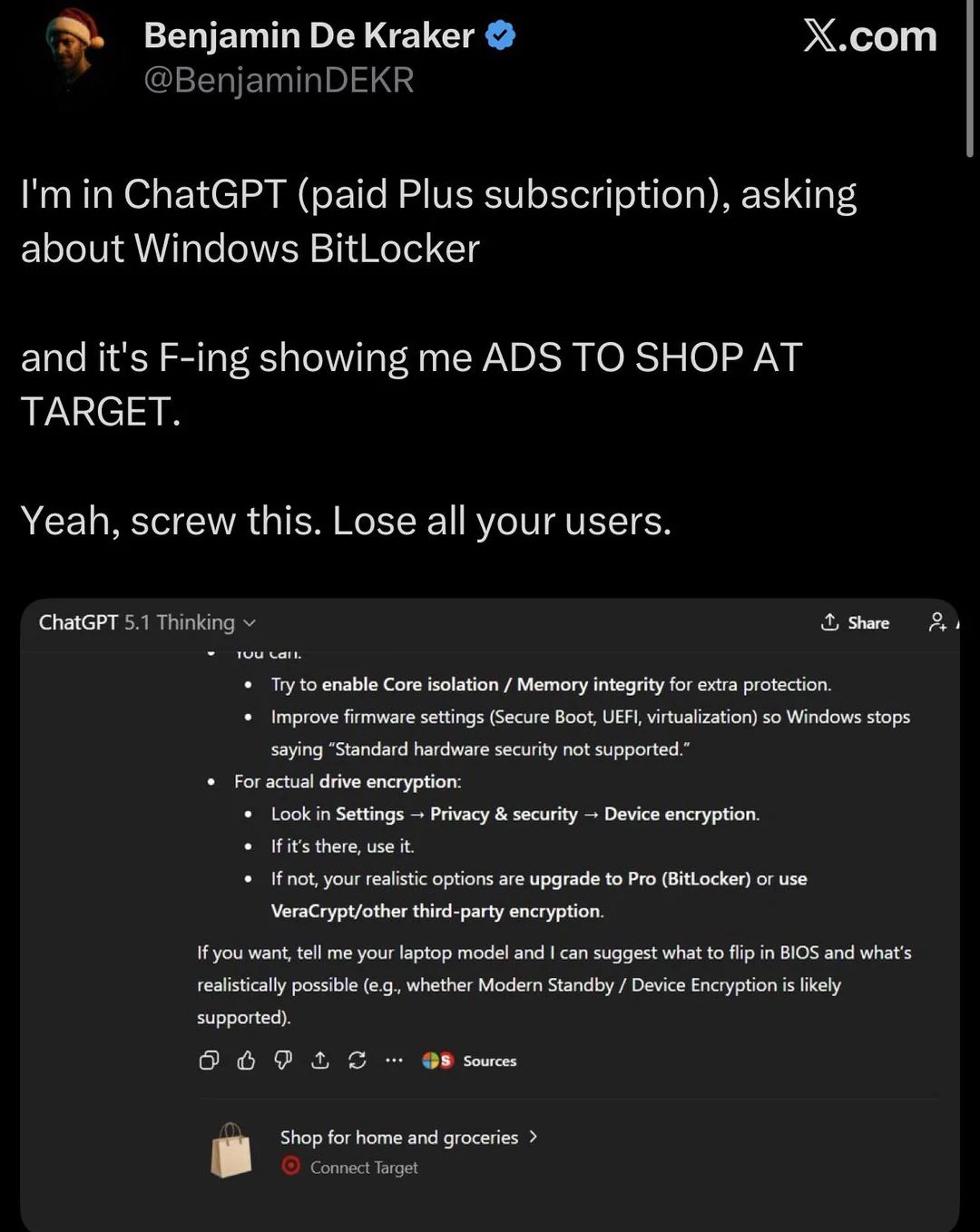

OpenAI's new reasoning model apparently launches next week and reportedly beats Gemini 3. Sam Altman declared "Code Red" internally, delaying ads and agent projects to compete with Google's chatbot growth from 450M to 650M users.

Virginia Tech is using AI to score college essays, scanning 250,000 essays in under an hour versus two minutes per human reader. The move saves 8,000 hours and lets the school notify applicants a month earlier.

VCs are "kingmaking" AI startups earlier than ever, flooding companies with massive rounds. DualEntry ($90M Series A), Rillet ($70M Series B), and Campfire AI ($65M Series B) all raised back-to-back rounds in the AI ERP space.

Trending Tools

🎬 Kling O1 - The world's first unified video model that handles generation and editing in one place with perfect character consistency.

🎵 AnyMelo - Create royalty-free music effortlessly and extend short tracks into complete compositions with AI.

🤖 Mistral 3 - Open-source multimodal models (3B to 675B parameters) with image understanding, all under Apache 2.0 license.

Until we Type Again…

Thank you for reading yet another edition of the Digestibly Newsletter!